Memory Issues

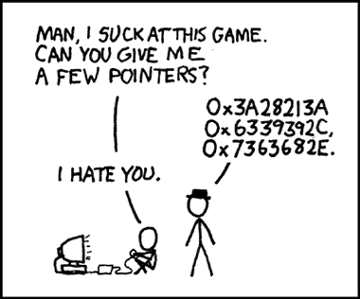

Oh right, I forgot, I have a blog. Just kidding. But I am back - a little older, a little wiser, and with a couple pointers on the importance of memory in a data science context.

Don't get it? I'll explain, but don't worry about it too much. To a programmer, a pointer is basically an address in memory, usually written in hexadecimal as you can see above. A pointer points to some piece of data that is already stored somewhere. You might want to use pointers when you have several large chunks of data that need to be grouped together. Instead of copying all the chunks into neighboring locations, you can store the addresses of the original chunks in consecutive locations in memory.

Many programming languages abstract away much of the joy of pointers and memory management. C++ is not one of those languages. For better or worse, I use C++ for most of my long-term data analysis code. Being able to fiddle with how things are stored is one of many reasons for this. Most of my reasons have to do with speed, and most of them can probably be debunked, but C++ gives me the greatest sense of control. I hate feeling like I am at the mercy of the programming language.

If you happen to remember from my last post, I mentioned that I am working toward building an image classifier and more. The proven tool for image classification is the Convolutional Neural Network. (Yeah, I know. Sounds convoluted. Hilarious.) Anyway, back in early December I finally managed to focus on this project long enough to prove that the three basic building blocks for CNNs that I built were working. The calculus for these things is insane(ly awesome), but that's a story for another time. Once I had proven their mathematical accuracy, I hooked everything up and let it loose on the classic MNIST handwritten digit dataset. It appeared to be working, but it was painfully slow.

Based on some very rough estimates, I would guess that my code was approximately 20,000 times slower than the code Google provides to do the same type of thing (TensorFlow). Google has 20,000 times more smart minds at its disposal, though, so I counted it as a win and gave up. Just kidding. 20,000 is a big number, so clearly I was doing something very wrong. The biggest single issue was the way the image data was stored. I won't explain why, but essentially each image was stored in 784 different locations in memory (784 happens to be the number of pixels in a 28-by-28 MNIST image). This meant that my CNN couldn't take advantage of caching. Caches are the brilliant result of fast memory being expensive: computers give you a small amount of fast, expensive memory to hold data that was recently accessed or that sits near data that was recently accessed. If each image is scattered across 784 different memory locations, the cache can't exploit spatial locality, the idea that you are likely to need data next to data you just accessed. So my CNN had to fall back on slower memory much more often.

Long story short, I spent about a month figuring out how to store all this data together. Doing so cut my code's runtime at least in half. To improve the speed further, I found common pieces of code that were duplicating chunks of data rather than using the chunk that already existed. After all this work, I estimate that my code is still quite a bit slower than Google's, but now by maybe a single- or double-digit factor instead.

So pointer #1 about memory: keep related data together as much as possible. Part of what makes a CNN special is that it cares about pixels that are next to each other. As a result, it will make lots of accesses to neighborhoods of pixel values all at once. If the data isn't stored well, you will pay for it in time.

Pointer #2 about memory: don't forget stuff. After the long adventure of fixing the memory layout of the structures that hold all my image data, I forgot something very important that cost me a week or two of my free time. I was sure I had proven that the math behind my CNN was solid before I set out to fix my memory issues. But after fixing the main memory issue, I wanted to make sure it hadn't somehow affected the math of my actual algorithms. It hadn't, but it looked like it had, because two pieces of code needed to be commented out to test the mathematical accuracy. I didn't remember this until after at least a week of scratching my head and doing lots of tedious, manual calculus.

So basically this post was just one giant excuse for why it has taken me so long to write a post. But I also want to encourage those interested in data science to consider the merits of real computer science knowledge in addition to math and basic programming. One of the things I appreciate most from my education at Taylor is a better understanding of the inner workings of memory and such. I am very self-motivated to learn the things I am interested in. I was never interested in going that deep into memory, but I was forced to learn it anyway, and I can say now that I really appreciate knowing it.

The good news is that my CNN is working pretty well and is reasonably fast. I have one kink I know of to fix, and then I hope to start showing off with some cool posts. However, don't expect too much very soon. March is creeping up on me. And you know what that means... MARCH MADNESS ALGORITHM!!!