My Maddening March Misfortune

The good news about my poor performance in this year's March Machine Learning Mania competition is that I can share all my secrets without worrying about everybody stealing my ideas. I mean go for it, but you'll still only be 259th out of 442. But before I give away all my secrets, I wanted to talk more generally about the competition and how it works. To be completely honest, doing so poorly this year was somewhat of a relief. I was proud of many of the improvements I made to the algorithm since the previous year, when I finished 38th out of about 600. But I figured I'd do well again this year and consequently have to keep everything secret so the competition couldn't steal my brilliant ideas. Since I did poorly, I get to share all those brilliant ideas, and I get to assume that most sane competitors who might have found my blog stopped reading after they saw 259th out of 442. However, sharing my ideas is for another post. First I want to give some background on the competition and restore confidence in the brilliance of my ideas despite the poor performance in this year's tournament.

Understanding the madness

First, I want to address some misconceptions I feel people get when they hear me talking about the competition. You need to understand that the algorithm I create is not an automatic-bracket-filler-outer. It can be (and is) used to do so, but the competition I partake in is not your typical bracket challenge. If it were, I would take significantly less pride in doing so well in 2016. That said, there's plenty of luck involved. So basically, be super impressed with me for last year's performance and not super unimpressed with me for this year's. Ok, so I'm probably not actually that amazing, but I do want to note that this isn't like winning your company bracket pool in the amount of luck involved (no offense to all you winners).

So if it's not a bracket competition, what is it? Before the games begin, each competitor submits a file with the probability that team A beats team B for every possible pair of teams A and B. This includes the four teams that are eliminated in the first four play-in games, even though those games aren't actually scored for the competition. So that's 68 teams times 67 potential opponents (4556 matchups). But that counts each matchup twice, so after removing duplicates you have 2278 probabilities that your algorithm has to come up with. This means that even if a team you predicted to lose in the first round makes it to the second round, you still have a prediction for its second-round game. This way your potential for reward or penalty in the later rounds isn't so dependent on the results of the earlier rounds.
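The matchup count is just "68 choose 2". Here is a quick Python check that enumerates each unordered pair exactly once with `itertools.combinations`; the team IDs 1 to 68 are hypothetical placeholders, not the competition's real IDs.

```python
from itertools import combinations
from math import comb

NUM_TEAMS = 68  # full field, including the four teams eliminated in the play-in games

# Hypothetical team IDs 1..68; a real submission would use the competition's own IDs.
team_ids = list(range(1, NUM_TEAMS + 1))

ordered_pairs = NUM_TEAMS * (NUM_TEAMS - 1)     # every team vs. each of its 67 possible opponents
unique_pairs = list(combinations(team_ids, 2))  # each unordered matchup exactly once, lower ID first

print(ordered_pairs)       # 4556
print(len(unique_pairs))   # 2278
print(comb(NUM_TEAMS, 2))  # 2278, i.e. "68 choose 2"
```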

Now we get to an interesting part: how it's scored. Predicting that team A has a 55% chance of beating team B doesn't mean I receive no penalty when team A wins. If I want zero penalty when team A wins, I need to be 100% confident that team A will win. And as you might expect, that comes with a hefty penalty if I'm wrong. The following graph should help you visualize this.

The histogram represents what all of the competitors predicted. Columns to the left mean they predicted Team A had a better chance of winning, and columns to the right mean they predicted Team B had a better chance of winning. Then there are two curves that show approximately how much a submission would be penalized. Since only one of the teams will actually win, only one of the curves applies to a submission's score. So if Team A wins, you can see from the blue curve that submissions with predictions on the right side of the graph suffer significantly larger penalties than those whose predictions lie on the left side.

So I already mentioned the neat property of not being penalized at all if you are 100% confident Team A will win and it actually happens. But now you can perhaps sort of see what happens if you were 100% confident but it didn't end up happening. This function actually has a vertical asymptote at 0 (or 1 if you're using the mirror of the function). So in theory that means you're penalized infinitely when you're 100% wrong about the winner. In practice, they do actually limit the penalty so that you could still compare people who made these infinite mistakes with one another.
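The penalty described here behaves like the logarithmic loss (log loss) that, as far as I know, Kaggle uses for this competition: for each game you're charged -ln(p), where p is the probability you gave the team that actually won, and the final score is the average over all scored games. Below is a minimal Python sketch under that assumption. The clipping bound `EPS` is an assumed stand-in for however the competition actually caps the penalty, and `game_penalty` and `log_loss` are hypothetical helper names.

```python
import math

# Assumed clipping bound: stands in for the competition's cap on the penalty.
EPS = 1e-15

def game_penalty(p_a: float, team_a_won: bool) -> float:
    """Log-loss penalty for one game, given the predicted probability that team A wins."""
    p = min(max(p_a, EPS), 1 - EPS)  # clip so a 0% or 100% miss stays finite
    return -math.log(p) if team_a_won else -math.log(1 - p)

def log_loss(predictions: list[float], outcomes: list[bool]) -> float:
    """Average penalty over all scored games (lower is better)."""
    total = sum(game_penalty(p, won) for p, won in zip(predictions, outcomes))
    return total / len(predictions)

print(round(game_penalty(0.55, True), 3))  # 0.598: a 55% pick that wins still costs something
print(round(game_penalty(1.0, True), 3))   # 0.0: fully confident and right
print(round(game_penalty(1.0, False), 1))  # 34.5: fully confident and wrong, capped by EPS
```

Without the clipping step, the last call would hit `math.log(0)`, which raises a `ValueError` instead of returning a huge but finite penalty.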

This elegant penalty function encourages predicting the actual probabilities. For instance, if the tournament consisted of team A playing team B 100 times and you believed team A had a 90% chance of winning each game, you would want to predict 90% for every game to minimize your overall penalty, even though the roughly ten games team A loses would hurt you significantly. Obviously each pair of teams plays at most once, but the idea still holds because so many games are played. Being over-confident will burn you badly in a select few games, while being under-confident costs you a little in each of many games.
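To make the 100-game thought experiment concrete, here is a small Python sketch. It assumes the penalty is log loss (-ln of the probability you gave the winner) and that team A wins exactly 90 of the 100 games; `total_penalty` is a hypothetical helper name.

```python
import math

def total_penalty(q: float, wins: int = 90, losses: int = 10) -> float:
    """Total log-loss penalty over 100 games if you predict q for team A every time
    and team A wins 90 of them (the 90% scenario from the text)."""
    return -(wins * math.log(q) + losses * math.log(1 - q))

for q in (0.70, 0.80, 0.90, 0.95, 0.99):
    print(f"predict {q:.2f}: total penalty {total_penalty(q):.1f}")

# Search a fine grid of predictions for the one with the smallest total penalty.
grid = [i / 1000 for i in range(1, 1000)]
best_q = min(grid, key=total_penalty)
print(best_q)  # 0.9
```

Predicting 0.90 gives the smallest total (about 32.5), while 0.80 (about 36.2) and 0.95 (about 34.6) both do worse: the under- versus over-confidence trade-off in numbers.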

Why 259th then?

Within the leaderboard, they usually have a few different benchmarks for simple prediction algorithms. For instance, one is just picking 50% for every game. In general, you did something wrong if you don't beat the given benchmarks. However, I wanted to make a few different "benchmarks" to compare my algorithm to.

First, since everybody assumes I do (or thinks I should) gamble with my algorithm, I wanted to compare myself with odds I could find on gambling sites. I made a little spreadsheet that showed which games my algorithm suggested I bet on and assumed I bet $100 on each of those games. After about the first 50 games, I was down about $750, so I stopped recording because it was a pain, and I was pretty sure the gambling sites had me beat.
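The exact betting rule isn't spelled out above, so the Python sketch below assumes a common one: bet on a team only when the model's win probability is higher than the probability implied by the sportsbook's decimal odds (1 / odds, ignoring the bookmaker's margin). The games, odds, and helper names are all made up for illustration.

```python
from dataclasses import dataclass

STAKE = 100.0  # flat $100 bet per game, as in the spreadsheet

@dataclass
class Game:
    model_prob: float    # model's probability that the team wins
    decimal_odds: float  # hypothetical sportsbook decimal odds on that team
    team_won: bool

def implied_prob(decimal_odds: float) -> float:
    """Break-even probability implied by decimal odds (ignores the bookmaker's margin)."""
    return 1.0 / decimal_odds

def should_bet(game: Game) -> bool:
    """Assumed rule: bet only when the model is more optimistic than the odds."""
    return game.model_prob > implied_prob(game.decimal_odds)

def profit(game: Game) -> float:
    """Net result of a flat bet: win (odds - 1) * stake, or lose the stake."""
    return STAKE * (game.decimal_odds - 1) if game.team_won else -STAKE

def running_total(games: list[Game]) -> float:
    return sum(profit(g) for g in games if should_bet(g))

# Made-up games purely for illustration, not real odds or results.
games = [
    Game(model_prob=0.60, decimal_odds=2.00, team_won=False),  # bet, loses $100
    Game(model_prob=0.40, decimal_odds=2.00, team_won=True),   # no bet
    Game(model_prob=0.30, decimal_odds=4.00, team_won=True),   # bet, wins $300
]
print(running_total(games))  # 200.0
```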

Next I wanted to use the predictions that FiveThirtyEight, a popular statistical news site owned by ESPN, produces every year. I wasn't sure it'd be that easy, since their published predictions give each team's chances of reaching each round rather than explicitly predicting who would win a particular matchup. But since they update the predictions every round, you can figure out the implied probability for each matchup. They also had a handy little CSV file with all their probabilities, so I didn't have to do much manual work. Using this data, I was able to figure out what FiveThirtyEight's score would have been had they entered those probabilities into the competition. Assuming I didn't make any errors along the way, they would have placed around 100th. I found this pretty encouraging, because they had two big advantages. For one, they got to update their predictions every round, unlike everyone else. And secondly, they are owned by ESPN and probably have a super rich dataset at their disposal. That said, they are a blog/news site, so attracting readers is their primary goal.
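Once a round's matchups are set and the forecast has been updated, a team's probability of reaching the next round is just its probability of winning its current game, so the implied matchup probability can be read straight off the forecast. A minimal Python sketch of that idea follows; the CSV layout, the column names `team_name` and `next_round_prob`, and the numbers are hypothetical, not FiveThirtyEight's actual file format.

```python
import csv
import io

def implied_matchup_prob(advance_a: float, advance_b: float) -> float:
    """Probability that team A beats team B in their current game.

    advance_a / advance_b: each team's forecast probability of reaching the next
    round, taken from a forecast updated after the previous round. They should
    already sum to about 1; dividing by the sum guards against rounding.
    """
    total = advance_a + advance_b
    if total <= 0:
        raise ValueError("advance probabilities must be positive")
    return advance_a / total

# Hypothetical CSV layout: one row per team with its chance of reaching the next round.
SAMPLE_CSV = """team_name,next_round_prob
Team A,0.73
Team B,0.26
"""

def load_advance_probs(text: str) -> dict[str, float]:
    reader = csv.DictReader(io.StringIO(text))
    return {row["team_name"]: float(row["next_round_prob"]) for row in reader}

probs = load_advance_probs(SAMPLE_CSV)
p = implied_matchup_prob(probs["Team A"], probs["Team B"])
print(round(p, 3))  # 0.737
```

The two sample probabilities deliberately sum to 0.99 rather than 1; dividing by their sum keeps the implied probabilities for A and B summing to 1.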

And finally, after the competition was over, I wanted to check my hypothesis that a lot of the people who beat me were more lucky than good. Even though the scoring function mentioned above encourages picking the actual probabilities, getting lucky with unrealistic probabilities could still put you at the top of the leaderboard. While 63 scored games is a decent sample size, the final submission scores aren't going to exactly represent the algorithms' accuracy. So I just stalked the top 5 leaders' Kaggle profiles to see if they were as successful in their other competitions.
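To get a feel for how much room 63 games leave for luck, here is a toy Python simulation. Everything in it is assumed: each favorite's true win probability is drawn uniformly between 0.5 and 0.95, one entry predicts those true probabilities exactly, and the other is nudged 0.08 toward certainty. It is meant to show that even a perfectly calibrated entry's score varies from tournament to tournament, and that a worse-calibrated entry sometimes beats it.

```python
import math
import random
import statistics

NUM_GAMES = 63  # scored games (the play-in games are not scored)
NUM_TOURNAMENTS = 2000
rng = random.Random(2017)  # fixed seed so the run is reproducible

def log_loss(preds, outcomes):
    """Mean log loss; preds are probabilities that the favorite wins."""
    return -sum(math.log(q) if won else math.log(1 - q)
                for q, won in zip(preds, outcomes)) / len(preds)

calibrated_scores, overconfident_scores = [], []
for _ in range(NUM_TOURNAMENTS):
    # Assumed "true" win probabilities for the favorite in each game.
    true_p = [rng.uniform(0.5, 0.95) for _ in range(NUM_GAMES)]
    outcomes = [rng.random() < p for p in true_p]            # True if the favorite wins
    overconfident = [min(p + 0.08, 0.99) for p in true_p]    # nudged toward certainty
    calibrated_scores.append(log_loss(true_p, outcomes))     # predicts the true probabilities
    overconfident_scores.append(log_loss(overconfident, outcomes))

# Count tournaments where the worse-calibrated entry got the better (lower) score.
upsets = sum(o < c for c, o in zip(calibrated_scores, overconfident_scores))
print(f"calibrated mean {statistics.mean(calibrated_scores):.3f}, "
      f"std {statistics.stdev(calibrated_scores):.3f}")
print(f"overconfident mean {statistics.mean(overconfident_scores):.3f}")
print(f"overconfident entry scored better in {upsets / NUM_TOURNAMENTS:.0%} of tournaments")
```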

At first I couldn't decide if what I was seeing was encouraging or not. There was a mix of unimpressive and impressive profiles. For a couple of the leaders, this was their first Kaggle competition. It seemed like there might be a case for my algorithm being a quality algorithm despite its poor performance this year. But it also seemed like even if it was, the results from year to year are destined to just fluctuate wildly. But then I looked at Monte's profile.

The only competitions he had ever participated in were March Madness competitions. By the looks of his Houston Rockets logo profile picture, he was a real fan of the sport who wasn't half bad at data science. He had competed in four of these competitions. When I saw his rank in each of those competitions, it's safe to say I was impressed. He made the top 1% in two of those years and the top 19% and 32% in the other two years. It didn't seem like a fluke that he could do so well year after year after year (after year, to be exact).

So I decided to stalk this guy further, I guess to see if he had a blog or anything about his successes. That's when I found out that Monte McNair is the VP of Basketball Operations for the Houston Rockets. It all made sense now. He wasn't just a fan of the sport; he might actually know a thing or two about basketball given his day job. Shoutout to you, Mr. McNair, if you ever happen to find yourself in this post. I am thoroughly impressed with your success in these March Madness competitions. Also I hope you're okay with me calling you Monte throughout this post :).

After a few minutes of being nerd-struck (the nerd version of starstruck), my giant ego started to reemerge. What's this Monte guy got that I don't have? I could lead the Rockets to success too. Couldn't really be that difficult. So I was inspired for the first time ever to do one of those hypothesis tests they love to teach in stats class. This seemed like the perfect opportunity for a hypothesis test, since I had a couple of samples of data whose standard deviations I could compute. Not to mention it seemed like a good time to use a statistical method so commonly used to mislead people.

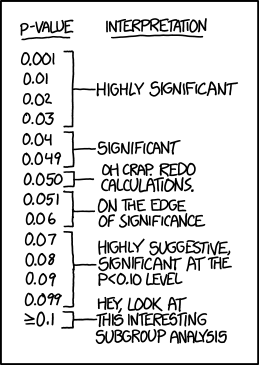

So I tried to set everything up in the most advantageous way possible. My null hypothesis was that I am just as smart as Monte (or in math speak: the true mean score of my algorithm minus the true mean score of Monte's algorithm equals zero). The alternative hypothesis is that Monte is smarter than me (math speak: my true mean minus his true mean is greater than zero, since a lower score means a smaller penalty). I chose 5% as the threshold: I would reject the null hypothesis only if the probability of a result at least this lopsided, assuming the null hypothesis, came out below 5%. No, I definitely wasn't planning on changing the threshold to my advantage if I needed to. Hey, check out this post's word from xkcd.

I'm not going to do all the math here, but if my calculations are correct, then assuming the null hypothesis (that I'm as smart as Monte) is true, there is approximately a 14% chance of seeing my score be at least this much worse than Monte's. Since 14% isn't below the 5% threshold, we fail to reject the null hypothesis. YES!!! Normally, you actually want to reject the null hypothesis (for instance, doing so might mean that a new pill "is" more beneficial than a placebo). But in this case, rejecting the null hypothesis would basically be saying that we don't think I could possibly be as smart as Monte. So despite placing 259th, I still managed to feed my ego.
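For anyone curious about the math I skipped, here is a minimal Python sketch of a one-sided test of this kind. It assumes each sample is a list of per-game penalties and uses a large-sample z statistic with each sample's own standard deviation. The numbers are placeholders, not my or Monte's actual scores, and `one_sided_p_value` is a hypothetical helper name.

```python
import math
import statistics

def normal_cdf(z: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def one_sided_p_value(my_losses: list[float], other_losses: list[float]) -> float:
    """P-value for H0: mean(mine) - mean(other) = 0 vs. H1: the difference is > 0.

    Uses a z statistic with a Welch-style standard error built from each sample's
    own variance. Lower log loss is better, so a positive difference means the
    other entry did better.
    """
    diff = statistics.mean(my_losses) - statistics.mean(other_losses)
    se = math.sqrt(statistics.variance(my_losses) / len(my_losses)
                   + statistics.variance(other_losses) / len(other_losses))
    return 1 - normal_cdf(diff / se)

ALPHA = 0.05  # the 5% threshold from the text

# Placeholder per-game penalties; substitute the real per-game losses for each entry.
mine = [0.35, 0.90, 0.20, 1.40, 0.55, 0.30]
theirs = [0.30, 0.70, 0.25, 1.10, 0.45, 0.35]
p = one_sided_p_value(mine, theirs)
print(f"p = {p:.3f}, reject H0: {p < ALPHA}")
```

Because both entries predict the same games, a paired test on the per-game differences would arguably be a better fit, and with samples as small as these placeholders, a t distribution would be more accurate than the normal approximation.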

In all seriousness, I was encouraged mostly by the fact that someone who clearly knows what he's doing can do so consistently well in these competitions. Even though Monte McNair probably has a few advantages over me (like more and better data, and being smarter than me, despite my hypothesis test saying he might not be), it's good to know that relatively consistent success is possible. In my next post I'll probably share a few of my secrets with everybody.